Today, computer experiments play a very important role in science. In the past, the physical sciences were characterized by an interplay between experiment and theory. In theory, a model of the system is constructed, usually in the form of a set of mathematical equations. This model is then validated by its ability to describe the system's behavior in a few selected cases that are simple enough for a solution to be computed from the equations. One might wonder why one does not simply derive all physical behavior of matter from the smallest possible set of fundamental equations, e.g. the Dirac equation of relativistic quantum theory.

However, the quest for the fundamental principles of physics is not yet finished, so the appropriate starting point for such a strategy remains unclear. But even if we knew all the fundamental laws of nature, there is another reason why this strategy does not work for ultimately predicting the behavior of matter on any length scale: fundamental theories, which are based on the dynamics of particles, become increasingly complex when they are applied to systems of macroscopic (or even microscopic) dimensions. In almost all cases, even for academic problems involving only a few particles, a strict analytical solution is not possible, and solving the problem very often requires considerable simplification. In experiments, by contrast, a system is subjected to measurements and the results are collected, very often in the form of large numerical data sets, from which one strives to find mathematical equations that describe the data by generalization, imagination and thorough investigation.

Only very rarely, and normally thanks to symmetries that allow inherent simplifications of the original problem, is an analytical solution at hand that exactly describes the experimental evidence given by the obtained data sets. Unfortunately, many academic and practical physical problems of interest do not fall into this category of “simple” problems, e.g. disordered and many-particle systems, where there is no symmetry that helps to simplify the treatment. In addition, many different properties are determined by structural hierarchies and processes on very different length scales and associated timescales.

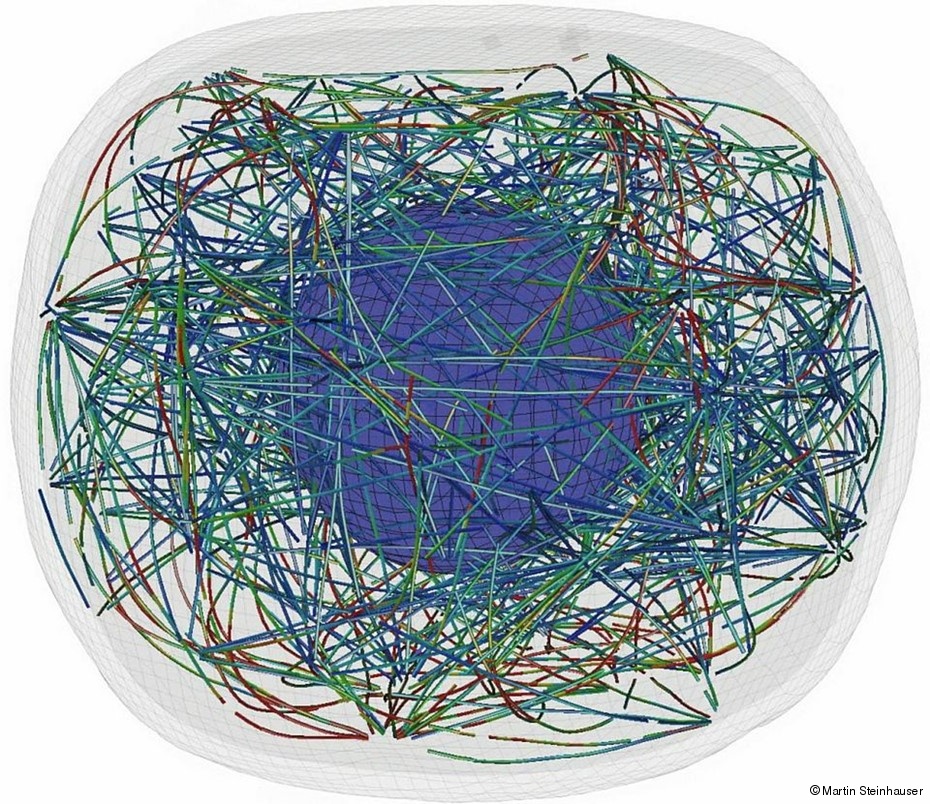

Efficient modeling of the system under investigation therefore requires special simulation techniques that are adapted to the relevant length scales and timescales. In our research we use state-of-the-art implementations of algorithms in programs that run on supercomputers; an example is given in Figure 1. The video displays the simulation of the rupture of a model of a eukaryotic biological cell using our new multiscale modeling scheme described elsewhere. The figure on top shows a snapshot of a complex model of a biological cell that is put under pressure; in a real experiment, the pressure could be applied by, e.g., the tip of a cantilever in an AFM single-cell experiment.

Selected publications

Computer Simulation in Physics and Engineering

M.O. Steinhauser

De Gruyter, Leipzig, Berlin, Boston, 2013